Jia (Kevin) Liu,

Associate Professor of Electrical and Computer Engineering, The Ohio State University

|

|

|

|

|

|

|

My research focuses on convex/non-convex optimization, algorithm designs, applied probability, stochastic control, and their applications in the areas of Theoretical Machine Learning, Cyber-Physical Systems (CPS), Internet-of-Things (IoT), Data Analytics, Communication Networks, Cloud Computing, Network Economy, etc. These systems can all be modeled as stochastic networks of many interacting agents sharing and competing limited resources at different time-scales and stochastic dynamics. My goal is to understand fundamental performance limits of such systems, and develop efficient, adaptive, low-complexity, and scalable algorithms for diverse applications in these systems. To that end, I am taking an interdisciplinary effort spanning computer science, electrical engineering, and operations research. My research utilizes and contributes to machine learning, artificial intelligence, network science, stochastic control theory, queueing theory, optimization theory, and low-complexity algorithm design. In what follows, I give an overview of my research contributions in recent years (with representative publications), which are (loosely) caegorized into two main areas i) Theoretical Machine Learning and ii) Stochastic Network Optimization Theory. |

|

I. Theoretical Machine Learning |

|

|

|

|

|

|

|

1) Preference Learning: Sample Complexity Upper and Lower Bounds

Preference learning is a foundational subfield of machine learning, which unifies many important problems in supervised, semi-supervised, and unsupervised learning as special cases. Simply speaking, typical tasks of most preference learning problems are focused on maxing, selection, ranking, etc. For example, many classification problems in supervised learning can be veiwed as ranking the most favorable/probable labels. As another example, many pure-exploration problems in the classical multi-armed bandit framework (MAB) in online sequential decision making and statistical leanring can be viewed as ranking or selecting the most favorable set of arms, etc. Although ranking/sorting/selection algorithms in determnistic settings have long been the pillars of algorithm designs in computer science, many optimal ranking/sorting/selection preferential algorithm designs under noisy sampling/comparisons remain wide open. Meanwhile, ranking from noisy comparisons/samplings is a canonical problem that has found applications in various areas, such as social choices, web search, crowd sourcing, online recommendation systems, communication networks, etc. In our preferential learning research, we focus on active (adaptive) learning, where the learner adaptively chooses items to compare based on previous comparison results/history, and returns a ranking when having enough confidence. The goal of our preferential learning research is to develop ranking/sorting/selection algorithms that generate correct rankings with high probability and provable optimal sample complexity. Our results in this area have appeared in top machine learning venues, e.g., NeurIPS [1], ICML [2], AISTATS [3]. Publications:

2) Efficient Distributed / Decentralized / Federated Optimization for Machine Learning

From its early days as a discipline, machine learning has made extensive use of optimization formulations and algorithms. Mathematically, the training process of a machine learning task is typically manifested as solving a large-scale non-convex optimization problem. However, fueled by large-scale and over-parameterized (deep) learning models, recent years have witnessed an ever-increasing need for computing power. However, with the miniaturization of transistors nearing the limit at atomic scale, it is projected that the celebrated Moore's law will end in the next few years. Consequently, to sustain the rapid growth for machine learning technologies in the post-Moore's-Law era, the only viable solution is to exploit parallelism at and across different spatial scales. Indeed, the recent success of machine learning research and applications is due in a large part to the advances in multi-core CPU/GPU technologies (on the micro chip level) and networked cloud computing (on the macro data center level), which enable the developments of highly parallel and distributed algorithmic architectures. However, developing efficient and effective distributed/decentralized/federated algorithms is highly non-trivial and many algorithms design challenges quickly arise to address various performance issues. In this research, we focus on several key efficiency performance metrics that affect the future prospects of distributed/decentralized learning, such as communication-efficiency, communication complexity (convergence speed), sample complexity, etc. We also pay attention to low-complexity desgins (e.g., asynchrony) and their impacts on the above mentioned efficiency metrics. So far, our results in this area have appeared in top venues in networking and control, e.g., ICLR [1], IEEE INFOCOM [2,3,4], ACM MobiHoc [5], IEEE CDC [6]. Publications:

3) Multi-Armed Bandit (MAB) Problems Arising from Real-World Applications

The multi-armed bandit (MAB) modeling framework has been widely adopted for studying many online sequential decision optimization problems (network resource allocation, ad placement, crowdsourcing, etc.) with unknown parameters. The goal of the decision maker is to maximize the cumulative reward in the face of uncertainty, or equivalently, to minimize the gap (often referred to as regret) compared to an offline optimal solution. However, the basic MAB model neglects many important factors of the system in real-world applications. For example, in wireless network scheduling where multiple seemingly feasible scheduling decisions can be chosen, their corresponding underlying wireless channel could be unavailable (i.e., an arm could be "sleeping"). Also, in the basic MAB model, there is a lack of fairness modeling, which often arises in many applications (e.g., task assignment in crowd-sourcing, online ad placement, etc.). Therefore, how to develop algorithms to minimize regret while guaranteeing fairness becomes highly non-trivial. As another example, in many online recommender systems, crowd-sensing, and e-commerce sites, the agent cannot determine which arm(s) to pull and has to rely on users/customers, who need to be incentivized. Also, the users could exhibits various complex features, e.g., biases in preference towards arms, self-reinforcing behaviors (i.e., certain types of users receiving rewards could lead to more users of the same type to arrive), etc. In these cases, developing good incentive mechanisms to achieve low regret with low incentive payments are highly desirable. Indeed, our research interests in MAB are all motivated from such real-world problems. Publications:

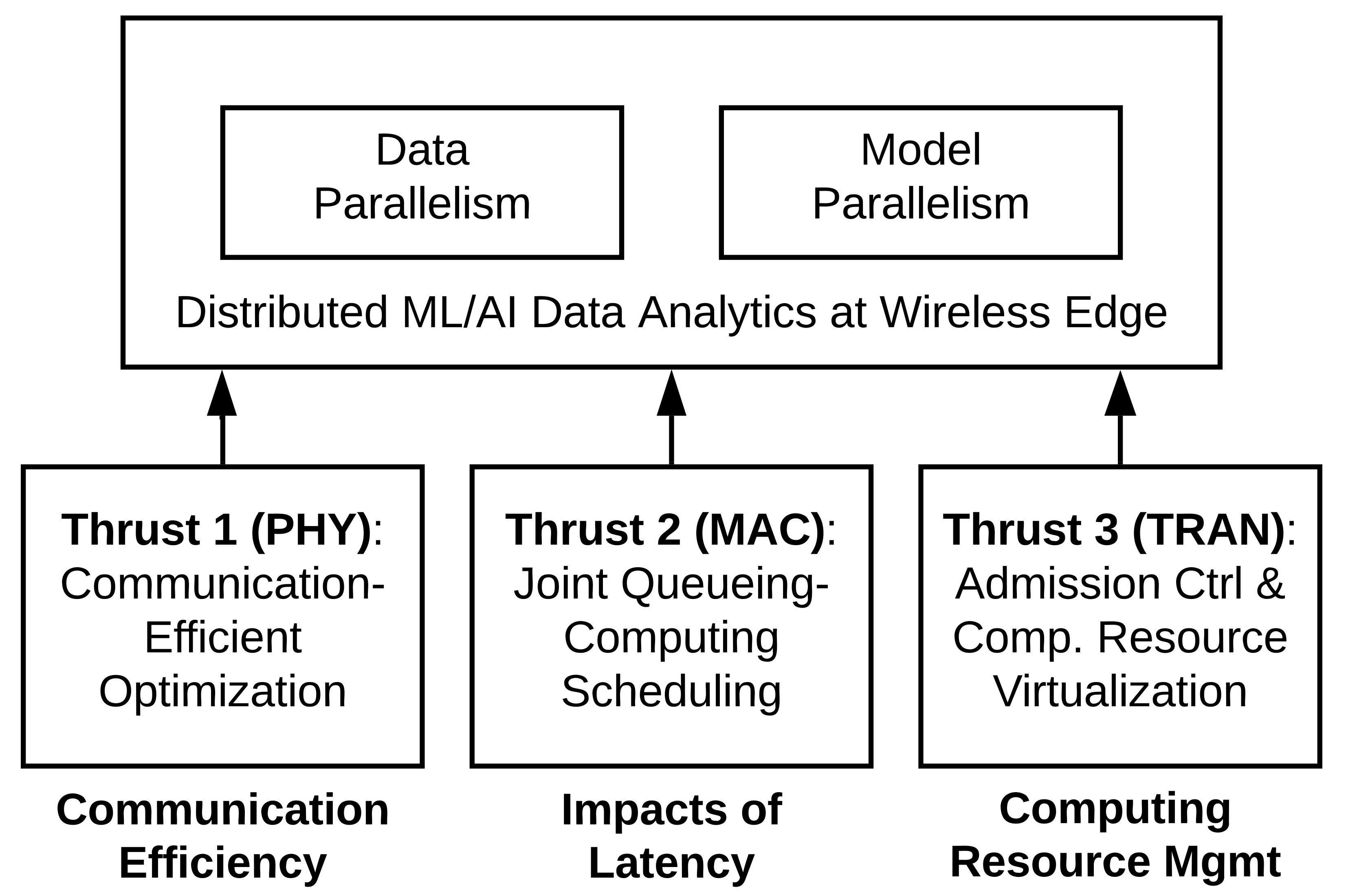

4) Computing-Networking Co-Designs for Machine Learning and Artificial Intelligence

In recent years, machine learning (ML) and artificial intelligence (AI) applications are quickly finding their ways into our everyday life, including healthcare, automotive, retail, smart home, just to name a few. All these applications generate and inject extreme volumes of data into the network for a wide range of complex ML/AI data analytics tasks, including but not limited to the trainings and/or inferences in computer vision, natural language processing, recommendation systems, etc. Unfortunately, most of the existing wireless network control and optimization algorithms rarely take the new characteristics of ML/AI data analytic traffic into considerations. Likewise, most ML/AI data analytics algorithms oversimplify the underlying wireless networks as "bit pipes" and ignore their complex networking constraints (e.g., channel path-loss and fading, spectrum access, scheduling for interference mitigation, routing, congestion control, etc.), hence leading to poor overall data analytics efficiency. Hence, without jointly considering computing-networking co-designs, traditional network control and optimization algorithms are no longer sufficient to guarantee good throughput and latency performances for these distributed, data-intensive, and iteratively computational ML/AI analytics traffic. The overarching theme of this research program is to bridge the gap between the rapidly growing ML/AI data analytics demands and the existing network control and optimization technologies. The specific goals of this research are three-fold: (1) develop new computing-aware networking control and optimization algorithms across the protocol stack to support good performance in both computing (e.g., convergence speed, learning/inference accuracy, etc.) and networking (e.g., throughput and latency, etc.); (2) contribute to new designs of distributed ML/AI data analytics to meet the actual wireless edge network constraints (e.g., channel fading, interference, computing resource limits); and (3) implement and evaluate the proposed theoretical results by developing prototypes and testbed for real-world data analytics. Notably, this research has received the prestigious NSF CAREER Award [1]. Publications:

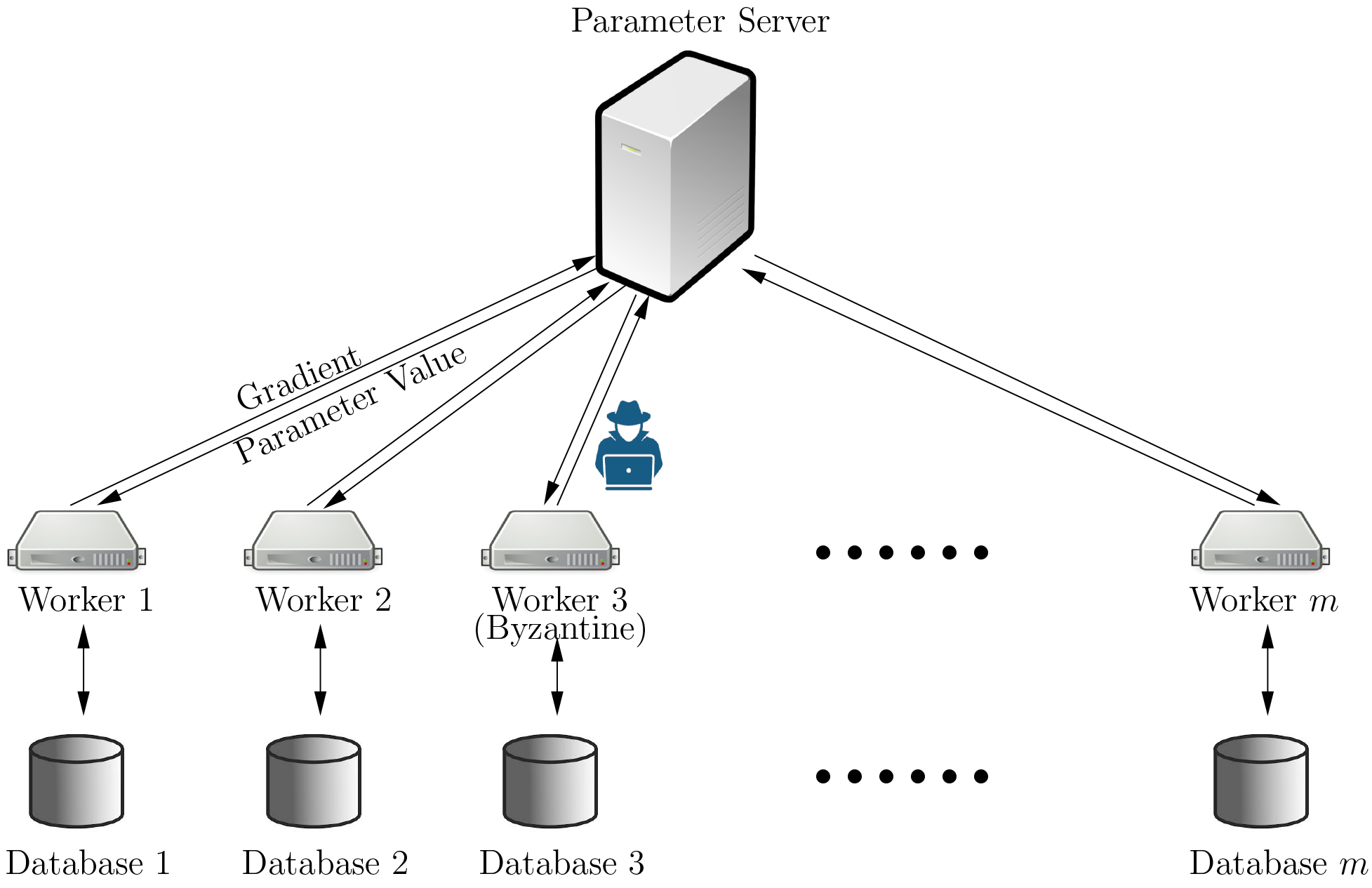

5) Privacy, Security, and Robustness of Machine Learning Algorithms

The proliferation of machine learning in recent years have led to a dramatic increase of computation power needs and necessitaes large-scale distributed learning deployments in both data centers and edge computing environments. However, the use of distributed learning also opens up the potential of various adversarial cyber-attacks. For example, it has been shown that the gradient information exchange in distributed learning in edge networks could leak a substantial amount of private information. Also, it has been shown that there exists a fundamental trade-off between privacy protection and learning accuracy. In [11], we have developed a private and communication-efficient decentralized gradient descent based learning algorithms that significantly improves the privacy-accuracy trade-off over the state-of-the-art. In addition, a serious problem in SGD-based distributed optimization methods is that they are prone to the so-called Byzantine attacks, where a malicious worker machine returns arbitrary information to the parameter server. It has been shown that, under Byzantine attacks, even a single erroneous gradient can fail the whole learning system and causing the classical distributed SGD algorithm to diverge. In our recent work [12], we have proposed a new Lipschitz-inspired coordinate-wise median approach for Byzantine-resilient SGD-based distributed learning. In addition to privacy and Byzantine attacks, the risk of data poisoning attacks has also been rapidly increasing in various machine learning systems. For example, in our recent work [13,14], we have found that it is quite easy to attack association-rule-based, graph-based, and matrix-factorization-based recommender systems by injecting malicious users and manipulated data. In light of the rapid growth of machine learning systems and applications, there is a compelling need to design private, secured, and robust machine learning systems. So far, our results in this area have appeared in top venues in cyber-security, applied AI, control, e.g., NDSS [2], ACM WWW [1,5], IEEE CDC [4], ACM ACSAC [6]. Publications:

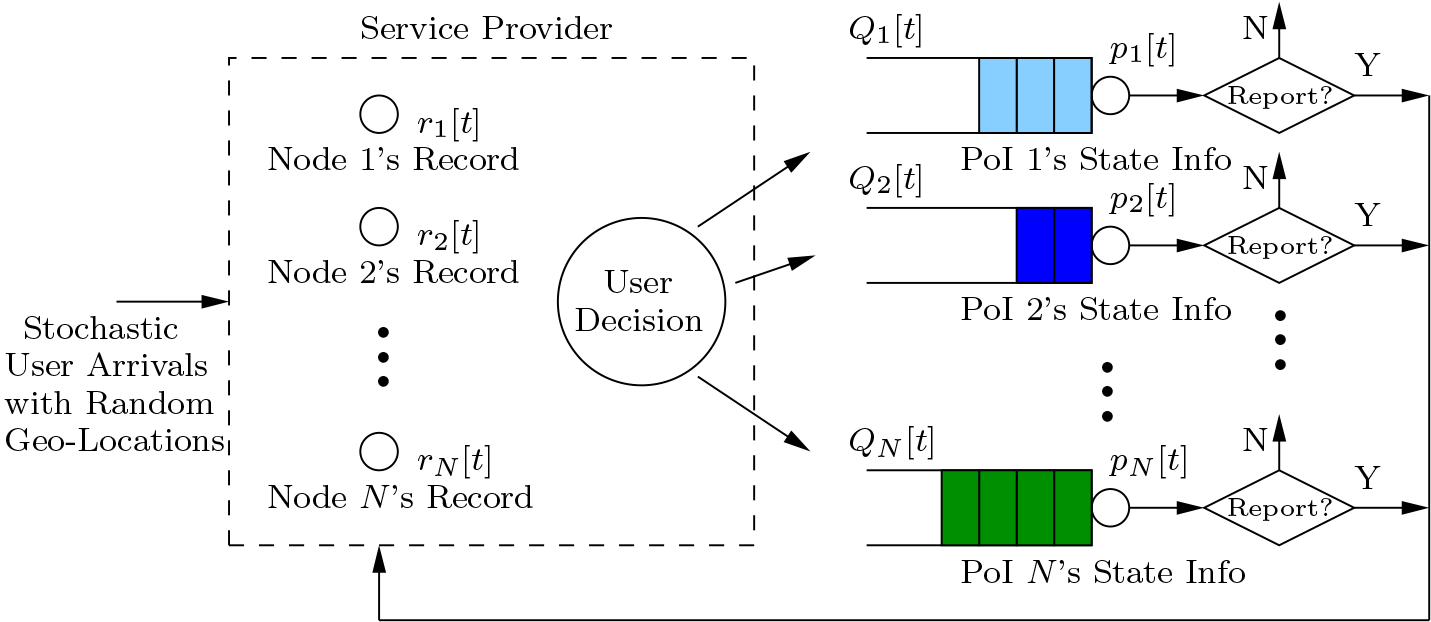

6) Achieving Data Freshness Guarantee in Crowd-Learning

The proliferation of smart mobile devices has spurred an explosive growth of mobile crowd-learning services, where service providers rely on the user community to voluntarily collect, report, and share real-time information for a collection of scattered points of interest. A critical factor affecting the future large-scale adoption of such mobile crowd-learning applications is the data freshness of the crowd-learned information, which can be measured by a metric termed “age-of-information” (AoI). However, in our work [1,2], we show that the AoI of mobile crowd-learning could be arbitrarily bad under selfish users’ behaviors if the system is poorly designed. This motivates us to design efficient reward mechanisms to incentivize mobile users to report information in time, with the goal of keeping the AoI and congestion level of each PoI low. In [1,2], we consider a simple linear AoI-based reward mechanism and analyze its AoI and congestion performances in terms of price of anarchy (PoA), which characterizes the degradation of the system efficiency due to selfish behavior of users. Remarkably, we show that the proposed mechanism achieves the optimal AoI performance asymptotically in a deterministic scenario. Further, we prove that the proposed mechanism achieves a bounded PoA in general stochastic cases, and the bound only depends on system parameters. Particularly, when the service rates of PoIs are symmetric in stochastic cases, the achieved PoA is upper-bounded by 1/2 asymptotically. In our follow-up work [20], we further extend this crowd-learning framework to consider the impacts of user arrival prediction. Notably, this research has received the prestigious Google Faculty Research Award. Publications:

|

|

II. Stochastic Network Optimization Theory |

|

|

|

|

|

|

|

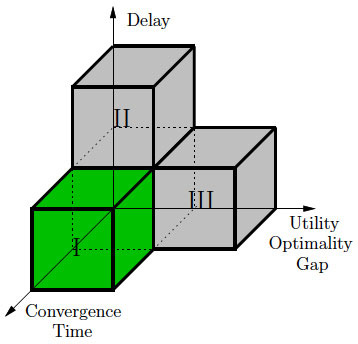

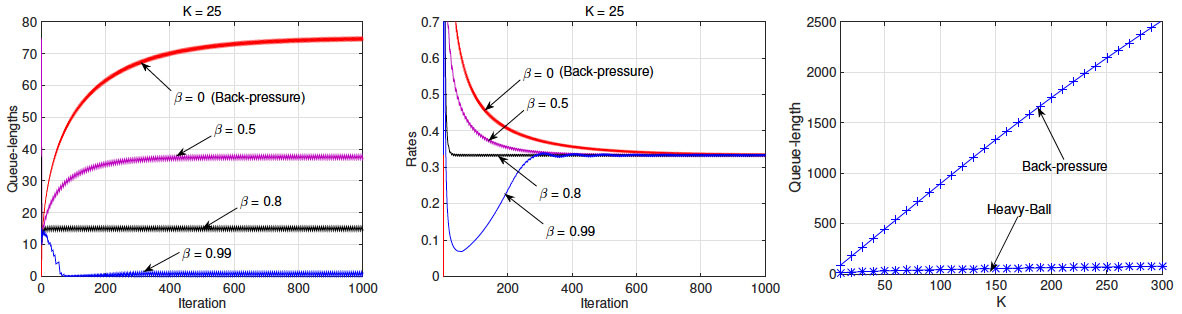

1) Momentum-Based Stochastic Network Optimization

The growing scale and complexity in modern cyber-physical systems and networks necessitate the designs of distributed scheduling and control algorithms that can operate based on locally observable information. To date, most of the standard distributed approaches are based on the Lagrangian dual decomposition framework and the (sub)gradient descent method, which suffer large queueing delay and slow convergence. To address this problem, I have developed a general theory and a series of momentum-based fast-converging and low-delay distributed control and optimization algorithms that leverage momentum information to offer orders of magnitudes of improvements in both queueing delay and convergence speed. Moreover, my momentum-based approach reveals an elegant and fundamental three-way performance control relationship between throughput, delay, and convergence speed, which is governed by two control degrees of freedom. This new momentum-based stochastic control and optimization theory includes two related approaches: i) Heavy-Ball approach [1], and ii) Nesterovian approach [2], which have been published top venues IEEE INFOCOM 2016 and ACM SIGMETRICS 2016, respectively. Moreover, the Heavy-Ball approach won the IEEE INFOCOM 2016 Best Paper Award. Based on the results in [1, 2], I have received a three-year NSF grant from the CIF program [3] as the Sole PI. This research has also been supported in part by AFRL 2015 Visiting Faculty Research Program at the Information Directorate (AFRL/RI) and 2016 AFOSR Summer Faculty Fellowship Program (SFFP) award.

Publications:

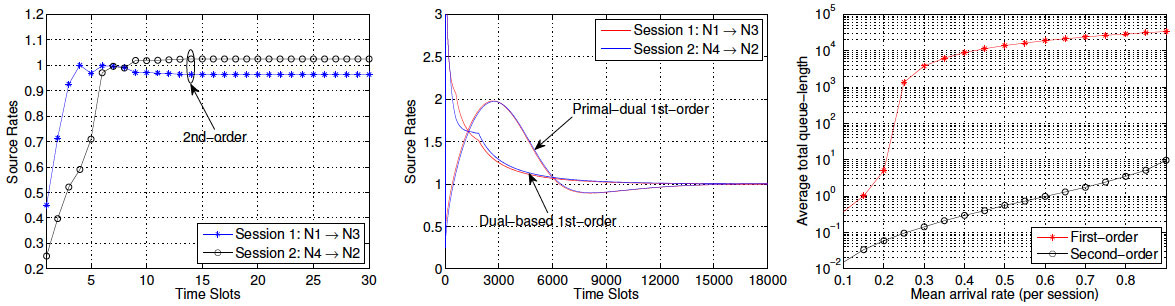

2) Second-Order Distributed Stochastic Network OptimizationEncouraged by the promising results in the momentum-based distributed stochastic control and optimization, I went one step further to ask the following questions: i) Can we further increase the convergence speed and reduce delay? ii) Is it possible to do better than the three-way performance trade-off? It turns out that both answers are "yes" and the key in answering these questions is to exploit full second-order Hessian information (SOHI). The basic philosophy of my SOHI-based approaches is to exploit not only first-order gradient information but, more importantly, second-order Hessian information in designing distributed optimization algorithms. So far, results in this direction have appeared in IEEE/ACM Transactions on Networking [3], ACM Sigmetrics Performance Evaluation Review [4], IEEE INFOCOM'12 [1], and IEEE INFOCOM'13 (Best Paper Runner-up Award, 1600+ submissions, acceptance rate 17%) [2]. Preliminary results in this direction have also played a key role in helping me to receive my sole-PI'ed NSF grant from the CIF program. My work contributes to a new and exciting paradigm shift in cross-layer network design that is evolving from first-order to second-order methods. I expect the outcomes of this research to provide a much needed comprehensive analytical foundation, new theoretical insights, novel control and optimization algorithms, as well as practical network protocol designs that will significantly advance our understanding of tomorrow's complex systems.

Publications:

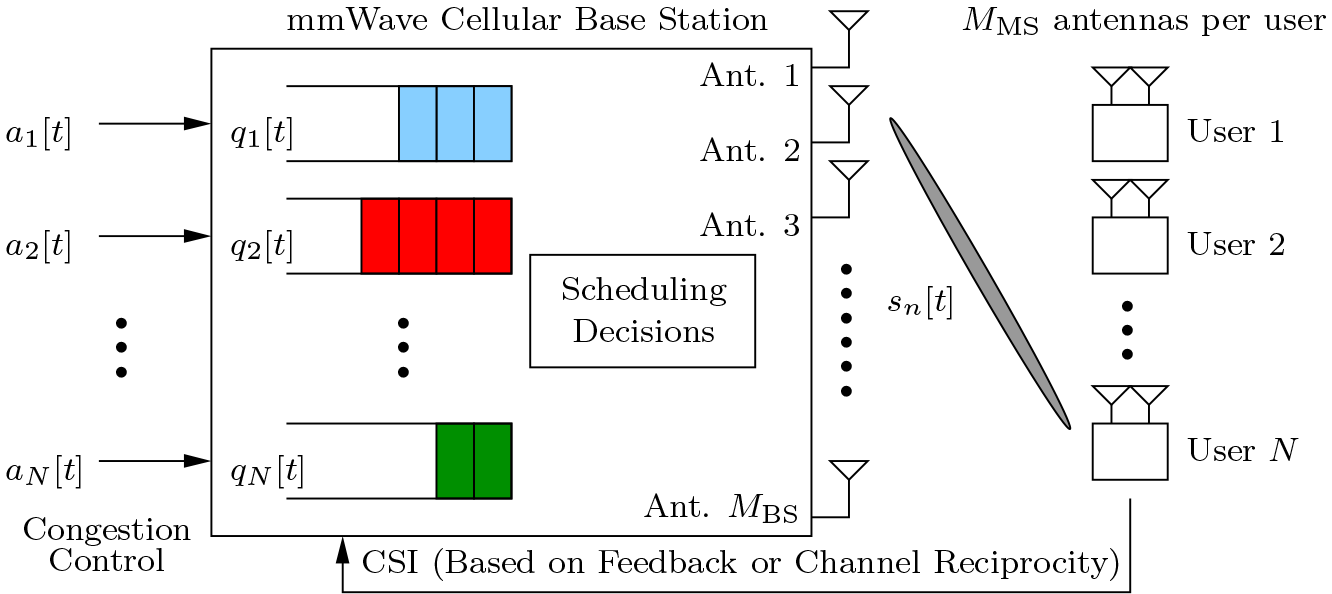

3) Millimeter-Wave and Massive MIMO Network Control and Optimization

To alleviate the rapidly increasing mobile data demands, in recent years, directional networking and communications (e.g., millimeter wave technology, massive MIMO systems, etc.) have emerged as enabling technologies for building the next generation mobile data networks and beyond. The excitements of directional networking are primarily due to: i) the rich unlicensed spectrum resources in millimeter wave bands; ii) the ease of packing large antenna arrays into small form factors; and iii) a much simplified interference management thanks to the highly directional signals. However, in spite of all of these promising progresses, the existing research efforts on directional are mostly concerned with problems at the physical layer or signal processing aspects. The understanding of how directional networking could affect the performance of network control, scheduling, and resource allocation algorithms remains limited in the literature. In this research, my goal is to fill this gap by conducting an in-depth theoretical study on the interactions between directional wireless communications technologies at the physical layer and network control and optimization algorithms at higher layers, as well as their impacts on queueing delay and throughput performances. Results of this research has appeared in IEEE JSAC'19 [1] and IEEE JSAC'17 [2]. Based on the preliminary results in [2], I won two three-year NSF grants the Sole PI [3] and Lead PI [4], respectively. Publications:

4) Network Economy: Trip-Vehicle Matching and Route Optimization in Ride-Sharing Systems

Spurred by increasing fuel shortage and environmental concerns, ride-sharing systems have attracted a great amount of attention in recent years. Lying at the heart of most ride-sharing systems is the problem of joint trip-vehicle matching and routing optimization, which is highly challenging and results in this area remain rather limited. This motivates us to fill this gap in our recent research [1]. Our contributions in this research are three-fold: i) We propose a new analytical framework that jointly considers trip-vehicle matching and optimal routing; ii) We propose a linearization reformulation that transforms the problem into a mixed-integer linear program, for which moderate-sized instances can be solved by global optimization methods; and iii) We develop a memory-augmented time-expansion (MATE) approach for solving large-sized problem instances, which leverages the special problem structure to facilitate approximate (or even exact) algorithm designs. Joint trip-vehicle matching and routing optimization is an under-explored problem. Future directions include more sophisticated models that incorporate uncertainties in riders’ arrivals and vehicles’ speeds, heterogeneous commute patterns, and their associated algorithm designs. Collectively, our results advance the state-of-the-art of intelligent ride-sharing and contribute to the field of sharing economy. Publications:

|

|

|

|

|

|

|

|

|

|

|

|

I gratefully acknowledge the ongoing support from NSF (CAREER CNS-1943226, ECCS-1818791, CCF-1758736, CNS-1758757, ECCS-2331104), DARPA YFA (D24AP00265), ONR, and AFOSR / AFRL (FA8750-18-1-0107, SFFP, VFRP awards, and the associated extension grants), as well as a variety of industrial grants including Cisco, Meta, Amazon, and a Google Faculty Research Award for my research.

|